Tesla CEO Elon Musk personally dictated language used in a company video which, critics charge, overstated the capabilities of the company’s Autopilot driver-assistance system.

Autopilot and the more advanced Full Self-Driving system have come under intense fire in recent months from critics who contend they are not nearly as capable of hands-free driving as Tesla claims. They point to a growing list of crashes involving those technologies, a number of them fatal.

Such concerns have triggered various lawsuits and regulatory actions, including one in which the California Department of Motor Vehicles could move to ban the automaker from selling vehicles in the state. The acting head of the National Highway Traffic Safety Administration, meanwhile, said this month that an investigation into Autopilot is being accelerated. It could lead to a massive recall. And both the U.S. Justice Department and the Securities and Exchange Commission are looking at whether Tesla intentionally made misleading statements, which could result in fines or even criminal charges, Bloomberg reported last October.

Difference between “can” and “will”

In an overnight e-mail sent in October 2016, Musk wrote to the team developing Autopilot to stress the importance of showing what the technology is capable of, outlining a claim that, with Autopilot, Tesla vehicles would be capable of driving themselves.

“I will be telling the world that this is what the car *will* be able to do, not that it can do this,” Musk wrote the team, according to internal e-mails obtained by Bloomberg.

Tesla has repeatedly sent mixed signals about what Autopilot, and more recently FSD, can do. On its consumer website, the automaker says drivers using the earlier system must keep at least a light grip on the steering wheel at all times.

But, critics contend, the automaker and its CEO have done little to counter public perception that Autopilot can be operated fully hands-free. Indeed, a widely circulated picture of Musk taken right after the technology debuted shows both the CEO and his former wife riding in the front seat of a Tesla EV waving both hands out the window.

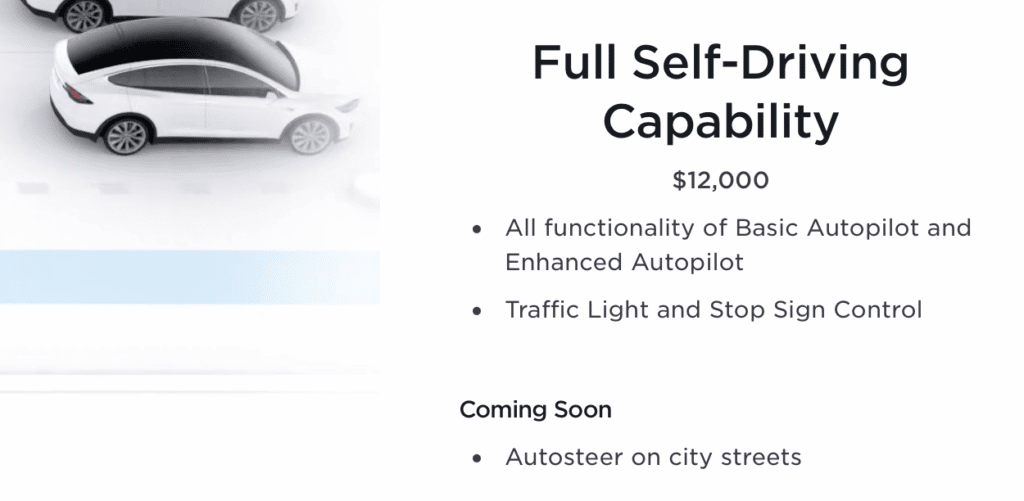

Since Autopilot debuted six years ago, Musk has repeatedly hyped its capabilities, and he has been equally effusive about the Full Self-Driving system that followed. He has often claimed that fully autonomous capabilities are close at hand, asserting several times in 2022 that the necessary software updates would be released by year-end.

At the same time, Tesla doubled the price of FSD to $15,000.

Pushing the limits

Musk isn’t the only one to test the limits of the two technologies. The internet is full of videos showing motorists not just driving hands-free but, in some cases, climbing into the back seat or falling asleep while the car continued rolling.

But safety advocates and regulators have grown increasingly concerned as the number of incidents involving Autopilot and Full Self-Driving has increased. These include an early fatal crash in Florida in which a former Navy SEAL slammed into a semi-truck while watching videos as his Tesla operated in Autopilot mode.

NHTSA is now conducting a probe that could lead to the recall of more than 800,000 vehicles equipped with Autopilot.

Federal safety regulators are “working really fast” to resolve the investigation, acting NHTSA chief Ann Carlson told reporters on Jan. 9. “We’re investing a lot of resources,” she said. The probe, she added, “require(s) a lot of technical expertise, actually some legal novelty and so we’re moving as quickly as we can, but we also want to be careful and make sure we have all the information we need.”

Autopilot and FSD are coming under scrutiny from several angles. Tesla has been sued by an owner essentially claiming he was sold technology that couldn’t do what was promised. The California Department of Motor Vehicles is looking into whether Tesla intentionally overstated the capabilities of its technology, and if it finds against Tesla, the DMV could fine the automaker or even ban it from operating in the state.

The Justice Department and the Securities and Exchange Commission are each looking into whether Tesla knowingly misled not just car buyers but investors. That could result in fines and even criminal prosecution.

The revelation of Musk’s e-mails is the latest in a series of setbacks for the automaker.

The automaker had asked a court to dismiss the September lawsuit filed by an owner, arguing that its marketing reflected goals it had set for Autopilot. “Mere failure to realize a long-term, aspirational goal is not fraud,” it said in a Nov. 28 motion to dismiss. The court subsequently rejected that motion.

In the newly published e-mails, Musk repeatedly reached out to the Autopilot team telling them how he wanted the video to look. Nine days after his first message, and after viewing a fourth version, he said it was still too choppy and “needs to feel like one continuous take.”

But what could be even more damning are comments made by Ashok Elluswamy, now the director of Autopilot software, in connection with a lawsuit filed by the family of Walter Huang, a former Apple employee killed in a March 2018 crash involving the semi-autonomous technology.

Legal testimony

During a June 2022 deposition, Elluswamy said, “The intent of the video was not to accurately portray what was available for customers in 2016. It was to portray what was possible.”

It also emerged that Tesla used high-definition maps to help operate the vehicle in the video, something not available to those driving retail vehicles with the Autopilot system.

For his part, Musk continues to defend the company’s semi-autonomous technologies. “Something that Tesla possesses that other automakers do not is that the car is upgradeable to autonomy,” Musk said during a Twitter Spaces event last month, according to Automotive News. “That’s something that no other car company can do.”

He has since faced further challenges over the technology, however. Earlier this month, Musk signaled that Tesla might disable the system that monitors driver behavior during Autopilot use for drivers with a good driving history. The system is meant to ensure drivers follow the rules, including keeping their attention on the road rather than being distracted by texting or watching videos. NHTSA has advised the automaker that such a move would not be acceptable.

It is possible that Tesla’s semi-autonomous software systems will get a clean bill of health and that its approach to marketing will be deemed acceptable. On the other hand, with lawsuits, regulatory actions and even the possibility of criminal action hanging over its head, Tesla’s emphasis on Autopilot and the newer FSD could prove to be a serious liability.